AI isn’t great at decoding human emotions. So why are regulators targeting the tech?

AI, emotion recognition, and Darwin

This article is from The Technocrat, MIT Technology Review's weekly tech policy newsletter about power, politics, and Silicon Valley. To receive it in your inbox every Friday, sign up here.

Recently, I took myself to one of my favorite places in New York City, the public library, to look at some of the hundreds of original letters, writings, and musings of Charles Darwin. The famous English scientist loved to write, and his curiosity and skill at observation come alive on the pages.

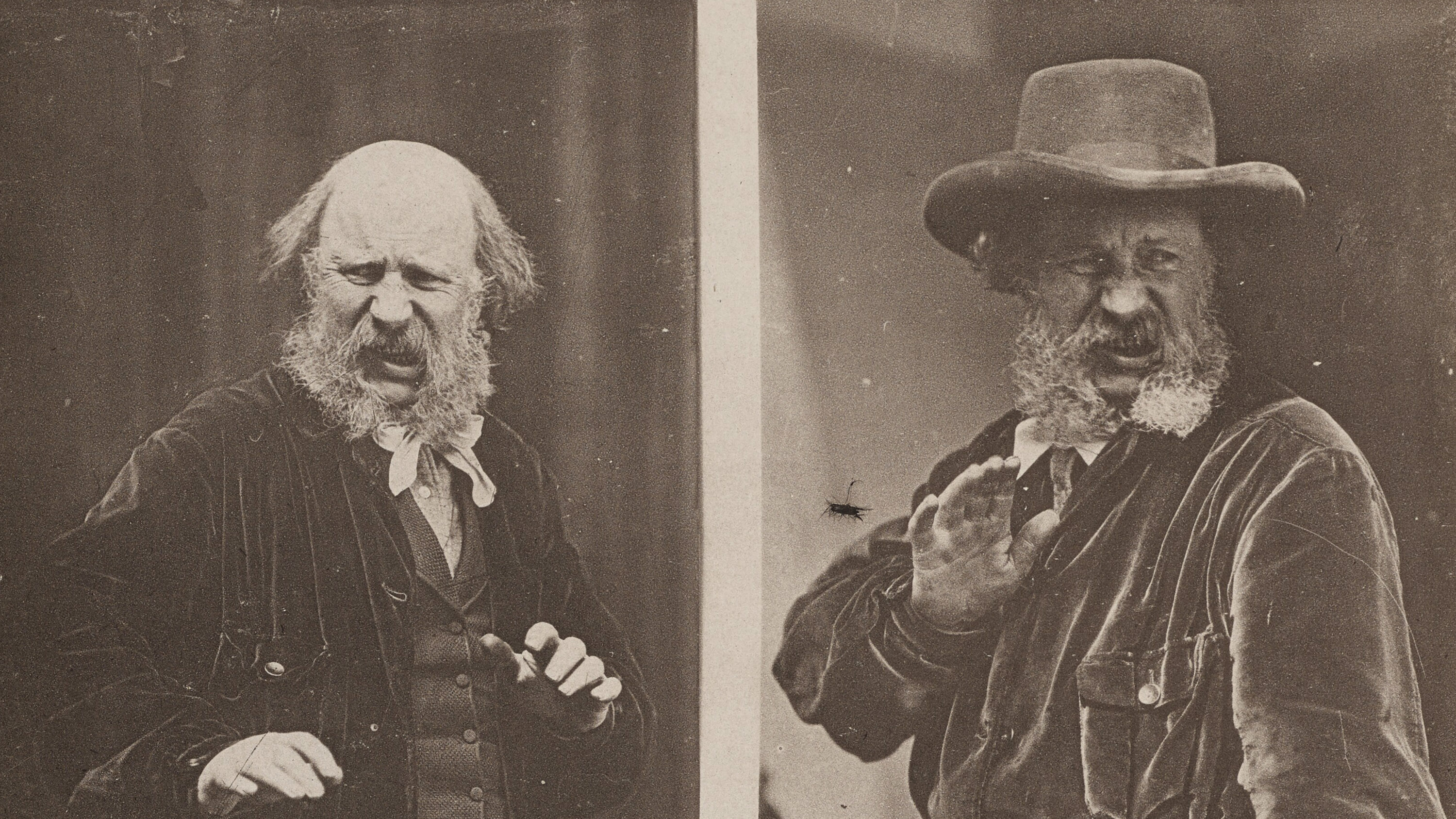

In addition to proposing the theory of evolution, Darwin studied the expressions and emotions of people and animals. He debated in his writing just how scientific, universal, and predictable emotions actually are, and he sketched characters with exaggerated expressions, which the library had on display.

The subject rang a bell for me.

Lately, as everyone has been up in arms about ChatGPT, artificial general intelligence, and the prospect of robots taking people’s jobs, I’ve noticed that regulators have been ramping up warnings about AI-based emotion recognition.

Emotion recognition, in this far-from-Darwin context, is the attempt to identify a person’s feelings or state of mind using AI analysis of video, facial images, or audio recordings.

The idea isn’t super complicated: the AI model may see an open mouth, squinted eyes, and contracted cheeks with a thrown-back head, for instance, and register it as a laugh, concluding that the subject is happy.

But in practice, this is incredibly complex—and, some argue, a dangerous and invasive example of the sort of pseudoscience that artificial intelligence often produces.

Certain privacy and human rights advocates, such as European Digital Rights and Access Now, are calling for a blanket ban on emotion recognition. And while the version of the EU AI Act that was approved by the European Parliament in June isn’t a total ban, it bars the use of emotion recognition in policing, border management, workplaces, and schools.

Meanwhile, some US legislators have called out this particular field, and it appears to be a likely contender in any eventual AI regulation. Senator Ron Wyden, who is one of the lawmakers leading the regulatory push, recently praised the EU for tackling it and warned, “Your facial expressions, eye movements, tone of voice, and the way you walk are terrible ways to judge who you are or what you’ll do in the future. Yet millions and millions of dollars are being funneled into developing emotion-detection AI based on bunk science.”

But why is this a top concern? How well founded are fears about emotion recognition—and could strict regulation here actually hurt positive innovation?

A handful of companies are already selling this technology for a wide variety of uses, though it's not yet widely deployed. Affectiva, for one, has been exploring how AI that analyzes people’s facial expressions might be used to determine whether a car driver is tired and to evaluate how people are reacting to a movie trailer. Others, like HireVue, have sold emotion recognition as a way to screen for the most promising job candidates (a practice that has been met with heavy criticism; you can listen to our investigative audio series on the company here).

“I’m generally in favor of allowing the private sector to develop this technology. There are important applications, such as enabling people who are blind or have low vision to better understand the emotions of people around them,” Daniel Castro, vice president of the Information Technology and Innovation Foundation, a DC-based think tank, told me in an email.

But other applications of the tech are more alarming. Several companies are selling software to law enforcement that tries to ascertain if someone is lying or that can flag supposedly suspicious behavior.

A pilot project called iBorderCtrl, sponsored by the European Union, offers a version of emotion recognition as part of its technology stack that manages border crossings. According to its website, the Automatic Deception Detection System “quantifies the probability of deceit in interviews by analyzing interviewees’ non-verbal micro-gestures” (though it acknowledges “scientific controversy around its efficacy”).

But the most high-profile use (or abuse, in this case) of emotion recognition tech is from China, and this is undoubtedly on legislators’ radars.

The country has repeatedly used emotion AI for surveillance—notably to monitor Uyghurs in Xinjiang, according to a software engineer who claimed to have installed the systems in police stations. Emotion recognition was intended to identify a nervous or anxious “state of mind,” like a lie detector. As one human rights advocate warned the BBC, “It’s people who are in highly coercive circumstances, under enormous pressure, being understandably nervous, and that's taken as an indication of guilt.” Some schools in the country have also used the tech on students to measure comprehension and performance.

Ella Jakubowska, a senior policy advisor at the Brussels-based organization European Digital Rights, tells me she has yet to hear of “any credible use case” for emotion recognition: “Both [facial recognition and emotion recognition] are about social control; about who watches and who gets watched; about where we see a concentration of power.”

What’s more, there’s evidence that emotion recognition models just can’t be accurate. Emotions are complicated, and even human beings are often quite poor at identifying them in others. Even as the technology has improved in recent years, thanks to the availability of more and better data as well as increased computing power, the accuracy varies widely depending on what outcomes the system is aiming for and how good the data is going into it.

“The technology is not perfect, although that probably has less to do with the limits of computer vision and more to do with the fact that human emotions are complex, vary based on culture and context, and are imprecise,” Castro told me.

Which brings me back to Darwin. A fundamental tension in this field is whether science can ever determine emotions. We might see advances in affective computing as the underlying science of emotion continues to progress—or we might not.

It’s a bit of a parable for this broader moment in AI. The technology is in a period of extreme hype, and the idea that artificial intelligence can make the world significantly more knowable and predictable can be appealing. That said, as AI expert Meredith Broussard has asked, can everything be distilled into a math problem?

What else I’m reading

- Political bias is seeping into AI language models, according to new research that my colleague Melissa Heikkilä reported on this week. Some models are more right-leaning and others are more left-leaning, and a truly unbiased model might be out of reach, some researchers say.

- Steven Lee Myers of the New York Times has a fascinating long read about how Sweden is thwarting targeted online information ops by the Kremlin, which are intended to sow division within the Scandinavian country as it works to join NATO.

- Kate Lindsay wrote a lovely reflection in the Atlantic about the changing nature of death in the digital age. Emails, texts, and social media posts live on long past our loved ones, changing grief and memory. (If you’re curious about this topic, a few months back I wrote about how this shift relates to changes in deletion policies by Google and Twitter.)

What I learned this week

A new study from researchers in Switzerland finds that news is highly valuable to Google Search and accounts for the majority of its revenue. The findings offer some optimism about the economics of news and publishing, especially if you, like me, care deeply about the future of journalism. Courtney Radsch wrote about the study in one of my favorite publications, Tech Policy Press. (On a related note, you should also read this sharp piece on how to fix local news from Steven Waldman in the Atlantic.)
