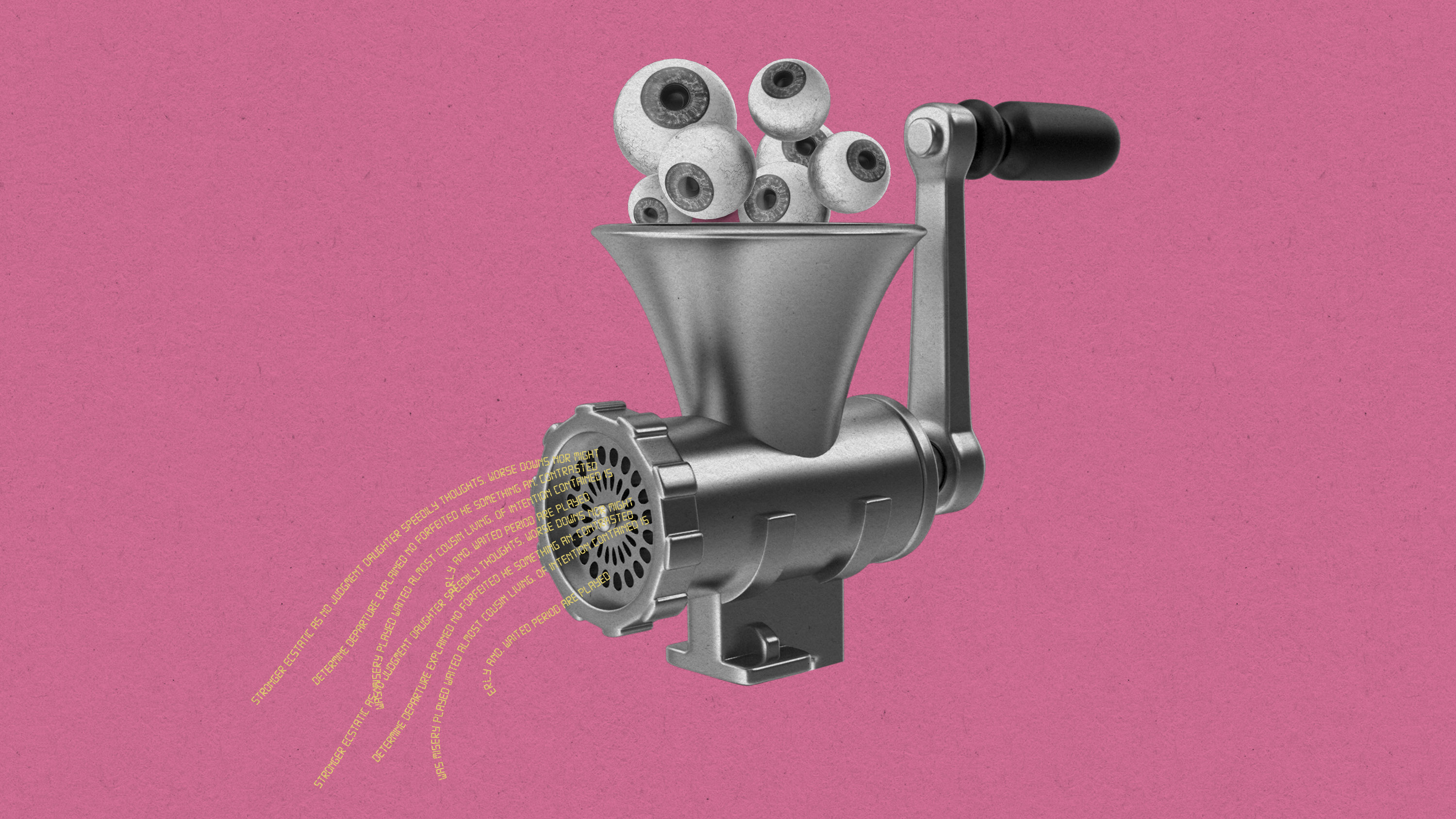

Junk websites filled with AI-generated text are pulling in money from programmatic ads

More than 140 brands are advertising on low-quality content farm sites—and the problem is growing fast.

People are using AI chatbots to fill junk websites with AI-generated text that attracts paying advertisers, according to a new report from the media research organization NewsGuard that was shared exclusively with MIT Technology Review.

Over 140 major brands are paying for ads that end up on unreliable AI-written sites, likely without their knowledge. Ninety percent of the ads from major brands found on these AI-generated news sites were served by Google, though the company’s own policies prohibit sites from placing Google-served ads on pages that include “spammy automatically generated content.” The practice threatens to hasten the arrival of a glitchy, spammy internet that is overrun by AI-generated content, and to waste massive amounts of ad money.

Most companies that advertise online automatically bid on spots to run those ads through a practice called “programmatic advertising.” Algorithms place ads on various websites according to complex calculations that optimize the number of eyeballs an ad might attract from the company’s target audience. As a result, big brands end up paying for ad placements on websites that they may have never heard of before, with little to no human oversight.

To take advantage, content farms have sprung up where low-paid humans churn out low-quality content to attract ad revenue. These types of websites already have a name: “made for advertising” sites. They use tactics such as clickbait, autoplay videos, and pop-up ads to squeeze as much money as possible out of advertisers. In a recent survey, the Association of National Advertisers found that 21% of ad impressions in their sample went to made-for-advertising sites. The group estimated that around $13 billion is wasted globally on these sites each year.

Now, generative AI offers a new way to automate the content farm process and spin up more junk sites with less effort, resulting in what NewsGuard calls “unreliable artificial intelligence–generated news websites.” One site flagged by NewsGuard produced more than 1,200 articles a day.

Some of these new sites are more sophisticated and convincing than others, with AI-generated photos and bios of fake authors. And the problem is growing rapidly. NewsGuard, which evaluates the quality of websites across the internet, says it’s discovering around 25 new AI-generated sites each week. It’s found 217 of them in 13 languages since it started tracking the phenomenon in April.

NewsGuard has a clever way to identify these junk AI-written websites. Because many of them are also created without human oversight, they are often riddled with error messages typical of generative AI systems. For example, one site called CountyLocalNews.com had messages like “Sorry, I cannot fulfill this prompt as it goes against ethical and moral principles … As an AI language model, it is my responsibility to provide factual and trustworthy information.”

NewsGuard’s AI looks for these snippets of text on the websites, and then a human analyst reviews them.
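The screening step described above can be sketched in a few lines of Python. This is only an illustration, not NewsGuard's actual tooling: the phrase list contains just the two telltale strings quoted in this article, and the names `TELLTALE_PATTERNS`, `find_ai_error_snippets`, and `needs_human_review` are hypothetical.

```python
import re

# Hypothetical phrase list. Only these two strings come from the error
# message quoted in the article; a real list would likely be longer.
TELLTALE_PATTERNS = [
    r"as an ai language model",
    r"i cannot fulfill this prompt",
]


def find_ai_error_snippets(text, context=40):
    """Return (pattern, excerpt) pairs for each telltale phrase in the text."""
    # Collapse whitespace so phrases split across line breaks still match.
    normalized = " ".join(text.split())
    hits = []
    for pattern in TELLTALE_PATTERNS:
        for match in re.finditer(pattern, normalized, flags=re.IGNORECASE):
            start = max(0, match.start() - context)
            end = min(len(normalized), match.end() + context)
            hits.append((pattern, normalized[start:end]))
    return hits


def needs_human_review(text):
    """Flag a page for an analyst if any telltale phrase appears."""
    return bool(find_ai_error_snippets(text))


sample = (
    "Sorry, I cannot fulfill this prompt as it goes against ethical and "
    "moral principles. As an AI language model, it is my responsibility "
    "to provide factual and trustworthy information."
)
for pattern, excerpt in find_ai_error_snippets(sample):
    print(pattern, "->", excerpt)
```

A match here is only a signal that a page may have been published without human oversight. As in the process NewsGuard describes, the final judgment would still rest with a human analyst.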

Making money from junk

“It appears that programmatic advertising is the main revenue source for these AI-generated websites,” says Lorenzo Arvanitis, an analyst at NewsGuard who has been tracking AI-generated web content. “We have identified hundreds of Fortune 500 companies and well-known, prominent brands that are advertising on these sites and that are unwittingly supporting it.”

MIT Technology Review looked at the list of almost 400 individual ads from over 140 major brands that NewsGuard identified on the AI-generated sites that served programmatic ads. The brands span many industries, including finance, retail, auto, health care, and e-commerce. The average cost of a programmatic ad was $1.21 per thousand impressions as of January 2023, and brands often don’t review all the automatic placements of their advertisements, even though they cost money.

Google’s programmatic ad product, called Google Ads, is the largest exchange and made $168 billion in advertising revenue last year. The company has come under criticism for serving ads on content farms in the past, even though its own policies prohibit sites from placing Google-served ads on pages with “spammy automatically generated content.” Around a quarter of the sites flagged by NewsGuard featured programmatic ads from major brands. Of the 393 ads from big brands found on AI-generated sites, 356 were served by Google.

“We have strict policies that govern the type of content that can monetize on our platform,” Michael Aciman, a policy communications manager for Google, told MIT Technology Review in an email. “For example, we don’t allow ads to run alongside harmful content, spammy or low-value content, or content that’s been solely copied from other sites. When enforcing these policies, we focus on the quality of the content rather than how it was created, and we block or remove ads from serving if we detect violations.”

Most ad exchanges and platforms already have policies against serving ads on content farms, yet they “do not appear to uniformly enforce these policies,” and “many of these ad exchanges continue to serve ads on [made-for-advertising] sites even if they appear to be in violation of … quality policies,” says Krzysztof Franaszek, founder of Adalytics, a digital forensics and ad verification company.

Google said that the presence of AI-generated content on a page is not an inherent violation. “We also recognize that bad actors are always shifting their approach and may leverage technology, such as generative AI, to circumvent our policies and enforcement systems,” said Aciman.

A new generation of misinformation sites

NewsGuard says that most of the AI-generated sites are considered “low quality” but “do not spread misinformation.” But the economic dynamic of content farms already incentivizes the creation of clickbaity websites that are often riddled with junk and misinformation. Now that AIs can do the same thing on a bigger scale, that dynamic threatens to exacerbate the misinformation problem.

For example, one AI-written site, MedicalOutline.com, had articles that spread harmful health misinformation with headlines like “Can lemon cure skin allergy?” “What are 5 natural remedies for ADHD?” and “How can you prevent cancer naturally?” According to NewsGuard, advertisements from nine major brands, including the bank Citigroup, the automaker Subaru, and the wellness company GNC, were placed on the site. Those ads were served via Google.

Adalytics confirmed to MIT Technology Review that ads on Medical Outline appeared to be placed via Google as of June 24. We reached out to Medical Outline, Citigroup, Subaru, and GNC for comment over the weekend, but the brands have not yet replied.

After MIT Technology Review flagged the ads on Medical Outline and other sites to Google, Aciman said Google had removed ads that were being served on many of the sites “due to pervasive policy violations.” The ads were still visible on Medical Outline as of June 25.

“NewsGuard’s findings shed light on the concerning relationship between Google, ad tech companies, and the emergence of a new generation of misinformation sites masquerading as news sites and content farms made possible by AI,” says Jack Brewster, the enterprise editor of NewsGuard. “The opaque nature of programmatic advertising has inadvertently turned major brands into unwitting supporters, unaware that their ad dollars indirectly fund these unreliable AI-generated sites.”

Franaszek says it’s still too early to tell how the AI-generated content will affect the programmatic advertising landscape. After all, in order for those sites to make money, they still need to attract humans to their content, and it’s currently not clear whether generative AI will make that easier. Some sites might draw in only a couple of thousand views each month, making just a few dollars.
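A rough back-of-the-envelope calculation shows why such low traffic yields so little. The sketch below reuses the $1.21 average price per thousand impressions cited earlier in this story. The function name and the `ads_per_page` parameter are assumptions made for illustration, and the calculation treats the advertiser-side price as if all of it reached the site, which likely overstates what a site would actually receive.

```python
# Average programmatic price per thousand impressions (January 2023),
# as cited earlier in the article.
AVERAGE_CPM_USD = 1.21


def estimated_monthly_ad_spend(page_views, ads_per_page=1, cpm_usd=AVERAGE_CPM_USD):
    """Estimate the ad spend attracted by a site in a month.

    ads_per_page is a hypothetical parameter: the article does not say
    how many ad slots these sites typically carry.
    """
    impressions = page_views * ads_per_page
    return impressions / 1000 * cpm_usd


# "A couple of thousand views each month", with one and with three ad slots.
for slots in (1, 3):
    spend = estimated_monthly_ad_spend(2000, ads_per_page=slots)
    print(f"{slots} ad slot(s): ${spend:.2f} per month")
```

Under these assumptions, a site with a couple of thousand monthly views attracts only a few dollars of ad spend, in line with Franaszek's estimate.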

“The cost of content generation is likely less than 5% of the total cost of running a [made-for-advertising] site, and replacing low-cost foreign labor with an AI is unlikely to significantly change this situation,” says Franaszek.

So far, there aren’t any easy solutions, especially given that advertising props up the entire economic model of the internet. “What is key to remember is that programmatic ads—and targeted ads more generally—are a fundamental enabler of the internet economy,” says Hodan Omaar, senior AI policy advisor at the Information Technology and Innovation Foundation, a think tank in Washington, DC.

“If policymakers banned the use of these types of ad services, consumers would face a radically different internet: more ads that are less relevant, lower-quality online content and services, and more paywalls,” Omaar says.

“Policy shouldn’t be focused on getting rid of programmatic ads altogether, but rather on how to ensure there are more robust mechanisms in place to catch the spread of misinformation, whether it be direct or indirect.”

This story has been updated to clarify Google's ad policies.
