Are you ready to be a techno-optimist again?

In 2001, we picked our first annual set of 10 breakthrough technologies. Here’s what their fates tell us about progress over the last two decades.

Twenty years ago, MIT Technology Review picked 10 emerging areas of innovation that we promised would “change the world.” It was a time of peak techno-optimism. Yes, the dot-com boom was in the midst of imploding; some insiders were already fretting about the end of Moore’s Law. (They still are, though the industry keeps finding ways to make computers more powerful.) But in many ways it was a glorious time for science and technology.

A working draft of the human genome was published in February of 2001—a genetic blueprint that promised to reveal our deepest biological secrets. There was great excitement over recent breakthroughs in nanotechnology. Early advances in quantum and molecular computing portended a new, post–Moore’s Law era of computation. And then there was that amazing search engine with the funny name, rapidly gaining users and changing how they surfed the web and accessed information. Feeling lucky?

So it’s worthwhile to look back at the initial “TR10,” as we now call our annual list, for clues to just how much progress we’ve made.

First, let’s acknowledge that it was a thoughtful list. We eschewed robotic exoskeletons and human cloning, as well as molecular nanomanufacturing and the dreaded gray goo of the nano doomsayers—all hot topics of the day. Instead we focused on fundamental advances in information technology, materials, and biotech. Most of the technologies are still familiar: data mining, natural-language processing, microfluidics, brain-machine interfaces, biometrics (like facial recognition), and robot design.

So how well did these technologies fulfill the dreams we had for them two decades ago? Here are a few lessons from the 2001 list.

Lesson 1: Progress is often slow

Our 2001 story on our first selection, brain-machine interfaces, opens with the neuroscientist Miguel Nicolelis recording the electrical signals from the brain of a very cute owl monkey named Belle as she thinks about how to get a few drops of apple juice. Flash forward to late summer 2020, as Elon Musk shows off the brain signals from a very cute pig named Gertrude, drawing oohs and ahhs from adoring fans attending the demonstration for Neuralink, his brain-machine startup.

An observer at Musk’s event might have been forgiven for wondering whether 20 years had really passed since Nicolelis’s experiment. Both men had similar visions for directly connecting the brain to computing devices via implanted chips. As our biomedicine editor, Antonio Regalado, wrote in 2001, “Nicolelis sees the effort as part of the impending revolution that could eventually make [brain interfaces] as common as Palm Pilots.”

That claim has come true, but thanks only to the demise of Palm Pilots, not the popularity of brain-machine interfaces. Despite some encouraging human experiments over the years, such interfaces remain a scientific and medical oddity. As it turns out, neuroscience is very difficult. There has been success in shrinking the electronics and making the implants wireless, but progress in the science has been slower, hindering the visions Nicolelis and Musk hoped to realize. (A footnote to lesson one: success often depends on whether a series of advances can all come together. Making brain interfaces practical requires advances in both the science and the gadgetry.)

Lesson 2: Sometimes it takes a crisis

We chose microfluidics in 2001 because of some remarkable advances in moving tiny amounts of biological samples around on a small device—a so-called lab-on-a-chip. These promised quick diagnostic tests and the ability to automate drug and genomic experiments.

Since then, microfluidics has found valuable uses in biology research. Clever advances continued, such as ultra-cheap and easy-to-use paper diagnostic tests (“Paper Diagnostics” was a TR10 in 2009). But the field has fallen short of its promise of transforming testing. There simply wasn’t an overwhelming demand for the technology. It’s fair to say that microfluidics became a scientific backwater.

Covid-19 ended that. Conventional tests rely on multistep procedures done in an analytical lab; this is expensive and slow. Suddenly, there is an appetite for a fast and cheap lab-on-a-chip solution. It took a few months for researchers to dust off the technology, but now covid-19 diagnostics using microfluidics are appearing. These techniques, including one that uses CRISPR gene editing, promise to make covid tests far more accessible and widely used.

Lesson 3: Be careful what you wish for

In 2001, Joseph Atick, one of the pioneers of biometrics, saw facial recognition as a way for people to interface with their gadgets and computers more securely and easily. It would give the cell phones and personal digital assistants that were increasingly popular a way to recognize their owners, spelling the end of PINs and passwords. Part of that vision eventually came true with such applications as Apple’s Face ID. But facial recognition also took a turn that Atick now says “shocks me.”

In 2001, facial-recognition algorithms were limited. They required instructions from humans, in mathematical form, on how to identify the distinguishing features of a face. And every face in the database of faces to be recognized had to be laboriously scanned into the software.

Then came the boom in social media. Whereas in the early days, Atick says, he would have been thrilled with 100,000 images in facial-recognition databases, suddenly machine-learning algorithms could be trained on billions of faces, scraped from Facebook, LinkedIn, and other sites. There were now hundreds of these algorithms, and they trained themselves, simply by ingesting and comparing images—no expert human help required.

But that remarkable advance came with a trade-off: no one really understands the reasoning the machines use. And that’s a problem now that facial recognition is increasingly relied on for sensitive tasks like identifying criminal suspects. “I did not envision a world where these machines would take over and make decisions for us,” says Atick.

Lesson 4: The trajectory of progress matters

“Hello again, Sidney P. Manyclicks. We have recommendations for you. Customers who bought this also bought …”

The recommendation engines described in this, the opening of our 2001 article on data mining, seemed impressive at the time. Another potential use of data mining circa 2001 also sounded thrilling: computer-searchable video libraries. Today, it all seems utterly mundane.

Thanks to ever-increasing computational power, the exploding size of databases, and closely related advances in artificial intelligence, data mining (the term is now often interchangeable with AI) rules the business world. It’s the lifeblood of big tech companies, from Google and its subsidiary YouTube to Amazon and Facebook. It powers advertising and, yes, sales of everything from shoes to insurance, using personalized recommendation engines.

Yet these great successes mask an underlying failure that became particularly evident during the pandemic. We have not exploited the power of big data in areas that matter most.

At almost every step, from the first signs of the virus to testing and hospitalization to the rollout of vaccines, we’ve missed many opportunities to gather data and mine it for critical information. We could have learned so much more about how the virus spreads, how it evolves, how to treat it, and how to allocate resources, potentially saving countless lives. We didn’t seem to have a clue about how to collect the data we needed.

Overall, then, the 10 technologies we picked in 2001 are still relevant; none has been forsaken; and some have been remarkable, even world-changing, successes. But the real test of progress is more difficult: Have these technologies made our lives not just more convenient, but better in ways that we care about? How do we measure that progress?

What makes you happy?

The common way to gauge economic progress is by measuring gross domestic product (GDP). It was formulated in the 1930s in the US to help us understand how well the economy was recovering from the Great Depression. And though one of its chief architects, Simon Kuznets, warned that GDP shouldn’t be mistaken for a measure of the country’s well-being and the prosperity of its people, generations of economists and politicians have done just that, scrutinizing GDP numbers for clues to the health of the economy and even the pace of technological progress.

Economists can tease out what they call total factor productivity (TFP) from GDP statistics; it’s basically a measure of how much innovation contributes to growth. In theory, new inventions should increase productivity and cause the economy to grow faster. Yet the picture has not been great over the last two decades. Since around the mid-2000s—shortly after our first TR10 list—growth in TFP has been sluggish and disappointing, especially given the flood of new technologies coming from places like Silicon Valley.

Some economists think the explanation may be that our innovations are not as far-reaching as we think. But it’s also possible that GDP, which was designed to measure the industrial production of the mid-20th century, does not account for the economic benefits of digital products, especially when they’re free to use, like search engines and social media.

Stanford economist Erik Brynjolfsson and his colleagues have created a new measure to try to capture the contribution of these digital goods. Called GDP-B (the “B” is for benefits), it is calculated by using online surveys to ask people just how much they value various digital services. What would you have to be paid, for example, to live a month without Facebook?

The calculations suggest that US consumers have gained some $225 billion in uncounted value from Facebook alone since 2004. Wikipedia added $42 billion. Whether GDP-B could fully account for the seeming slowdown in productivity is uncertain, but it does provide evidence that many economists and policymakers may have undervalued the digital revolution. And that, says Brynjolfsson, has important implications for how much we should invest in digital infrastructure and prioritize certain areas of innovation.

GDP-B is one of a larger set of efforts to find statistics that more accurately reflect the changes we care about. The idea is not to throw out GDP, but to complement it with other metrics that more broadly reflect what we might call “progress.”

Another such measure is the Social Progress Index, which was created by a pair of economists, MIT’s Scott Stern and Harvard’s Michael Porter. It collects data from 163 countries on factors including environmental quality, access to health care and education, traffic deaths, and crime. While wealthier countries, unsurprisingly, tend to do better on this index, Stern says the idea is to look at where social progress diverges from GDP per capita. That shows how some countries, even poor ones, are better than others at turning economic growth into valued social changes.

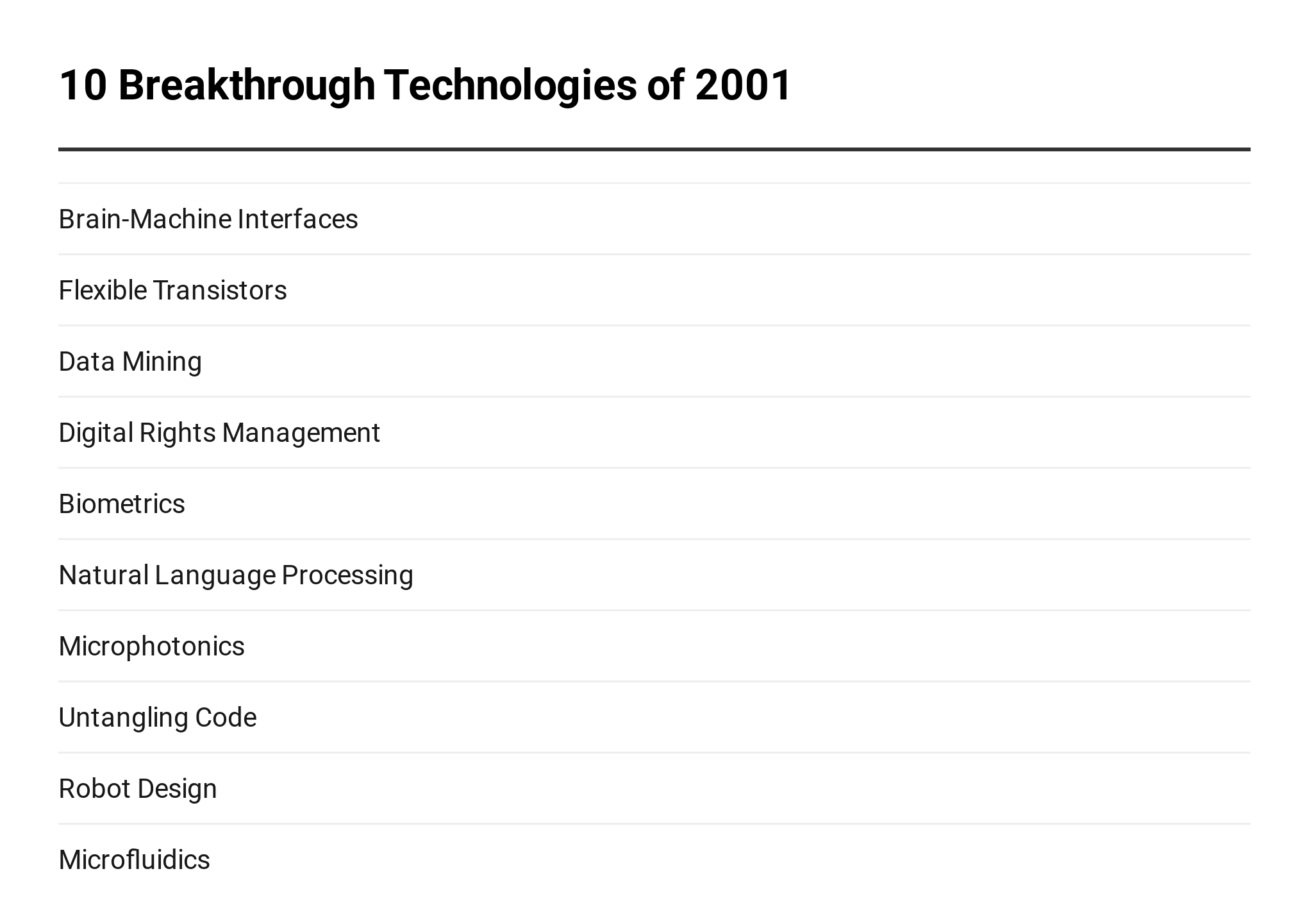

Survey from 13 countries shows generation gap

"Imagining when the covid-19 pandemic is over ... which should your country prioritize more?"

The US, with one of the world’s highest levels of GDP per capita, is 28th in the index, and is one of only four countries whose scores have declined since 2014. Norway, which is similarly wealthy, was ranked first in 2020 (see chart below). Some poorer countries also outperform.

“Very often the decisions about innovation and technology are about its economic impact,” says Stern. “There’s nothing wrong with that. But are we directing the economic rewards to areas that will advance social progress?”

A similar thought lies behind another alternative to GDP, developed by Diane Coyle and her colleagues at the Bennett Institute for Public Policy in Cambridge, UK. Their measure of what they call the wealth economy is based on what they define as the assets of a society, including its human capital (the health and skills of its people), natural capital (its resources and the health of the environment), and social capital (trust and social cohesion).

It’s a hugely ambitious project that attempts to create a couple of key measurements for each asset. Those numbers, says Coyle, are meant to inform better decisions about technology and innovation, including decisions on the priorities for government investment. She says the approach allows you to ask, “What is the technology doing for people?”

The value of these various alternatives to GDP is that they provide a broader picture of how our lives are changing as a result of technology. Had they been in place 20 years ago, they might have shed light on crises we were late in seeing, such as the growth of income inequality and the rapid deterioration of our climate. If 20 years ago was a time of peak techno-optimism, such measures might have prompted us to ask, “Optimism about what?”

Born-again hope

About a decade ago, the techno-optimist narrative began to fall apart.

In 2011 Tyler Cowen, an economist at George Mason University in Virginia, wrote The Great Stagnation, arguing that the technologies that seemed so impressive at the time—especially social media and smartphone apps—were doing little to stimulate economic growth and improve people’s lives. The Rise and Fall of American Growth, a 2016 bestseller by Robert Gordon, another prominent economist, ran to more than 700 pages, detailing the reasons for the slowdown in TFP after 2004. The temporary boom from the internet, he declared, was over.

The books helped kick off an era of techno-pessimism, at least among economists. And in the last few years, problems like misinformation on social media, the precarious livelihoods of gig-economy workers, and the creepier uses of data mining have fueled a broader pessimistic outlook: a sense that Big Tech not only isn’t making society better but is making it worse.

These days, however, Cowen is returning to the optimist camp. He’s calling for more research to explain progress and how to create it, but he says it’s “a more positive story” than it was a few years ago. The apparent success of covid vaccines based on messenger RNA has him excited. So do breakthroughs in using AI to predict protein folding, the powerful gene-editing tool CRISPR, new types of batteries for electric vehicles, and advances in solar power.

An anticipated boom in funding from both governments and businesses could amplify the impact of these new technologies. President Joe Biden has pledged hundreds of billions of dollars in infrastructure spending, including more than $300 billion over the next four years for R&D. The EU has its own massive stimulus bill. And there are signs of a new round of venture capital investments, especially targeting green tech.

If the techno-optimists are right, then our 10 breakthrough technologies for 2021 could have a bright future. The science behind mRNA vaccines could open a new era of medicine in which we manipulate our immune system to transform cancer treatment, among other things. Lithium-metal batteries could finally make electric cars palatable for millions of consumers. Green hydrogen could help replace fossil fuels. The advances that made GPT-3 possible could lead to literate computers as the next big step in artificial intelligence.

Still, the fate of the technologies on the 2001 list tells us that progress won’t happen just because of the breakthroughs themselves. We will need new infrastructure for green hydrogen and electric cars; new urgency for mRNA science; and new thinking around AI and the opportunities it presents in solving social problems. In short, we need political will.

But the most important lesson from the 2001 list is the simplest: Whether these breakthroughs fulfill their potential depends on how we choose to use them. And perhaps that’s the greatest reason for renewed optimism, because by developing new ways of measuring progress, as economists like Coyle are doing, we can also create new aspirations for these brilliant new technologies. If we can see beyond conventional economic growth and start measuring how innovations improve the lives of as many people as possible, we have a much greater chance of creating a better world.
